AI Prompting Essentials

We've all been there—trying to get a model to deliver the perfect response, only to end up with something less than satisfying. The trick? It's all in the prompts. Today, we’re diving into the fascinating world of prompting and how mastering this art can boost your AI communication to new heights 🚀.

1. Understanding Prompts

At its core, a prompt is the instruction or input given to an AI model to generate text. Think of it as good communication—tell the AI what you want clearly and concisely, and with a bit of context. Whether it's a simple question or a detailed task, crafting an effective prompt can significantly enhance the quality and relevance of the model's response.

Examples of Effective Prompts

Basic Prompt: "Describe the weather today."

Output: "The weather today is sunny with a few clouds and a gentle breeze."

Detailed Prompt: "Describe the weather in New York City today, mentioning the temperature, humidity, and any significant weather events."

Output: "In New York City today, the weather is sunny with a high of 75°F, humidity at 60%, and no significant weather events."

Adding context and details leads to better outputs. This is the essence of prompt engineering, where you optimize prompts for specific tasks to get the most relevant and accurate results.

Research Insight

According to a study by OpenAI, detailed prompts increase response relevance by up to 30%. [OpenAI Research Paper, 2023]

2. Basics of Prompt Formatting

Formatting your prompt is crucial for clarity. Use bullet points or numbered lists to structure your instructions. And don't forget to proofread—tools like Grammarly can save you from those annoying typos! 😅
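As a small illustration of structuring instructions, here is a minimal Python sketch that renders a task and its requirements as a numbered list. The helper name `format_prompt` and the "Requirements:" label are assumptions made for this example, not part of any particular tool.

```python
def format_prompt(task: str, requirements: list[str]) -> str:
    """Render a task plus its requirements as a numbered list."""
    lines = [task.strip(), "", "Requirements:"]
    for number, requirement in enumerate(requirements, start=1):
        lines.append(f"{number}. {requirement.strip()}")
    return "\n".join(lines)


prompt = format_prompt(
    "Describe the weather in New York City today.",
    [
        "Mention the temperature.",
        "Mention the humidity.",
        "Note any significant weather events.",
    ],
)
print(prompt)
```

Keeping each requirement on its own numbered line makes it easier to spot which instruction the model skipped when you review the output.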

Tools to Assist

- Anthropic's Generate a Prompt: Helps in crafting more detailed and clear prompts.

- Cohere’s Prompt Tuner: Optimizes your initial prompts for better outcomes.

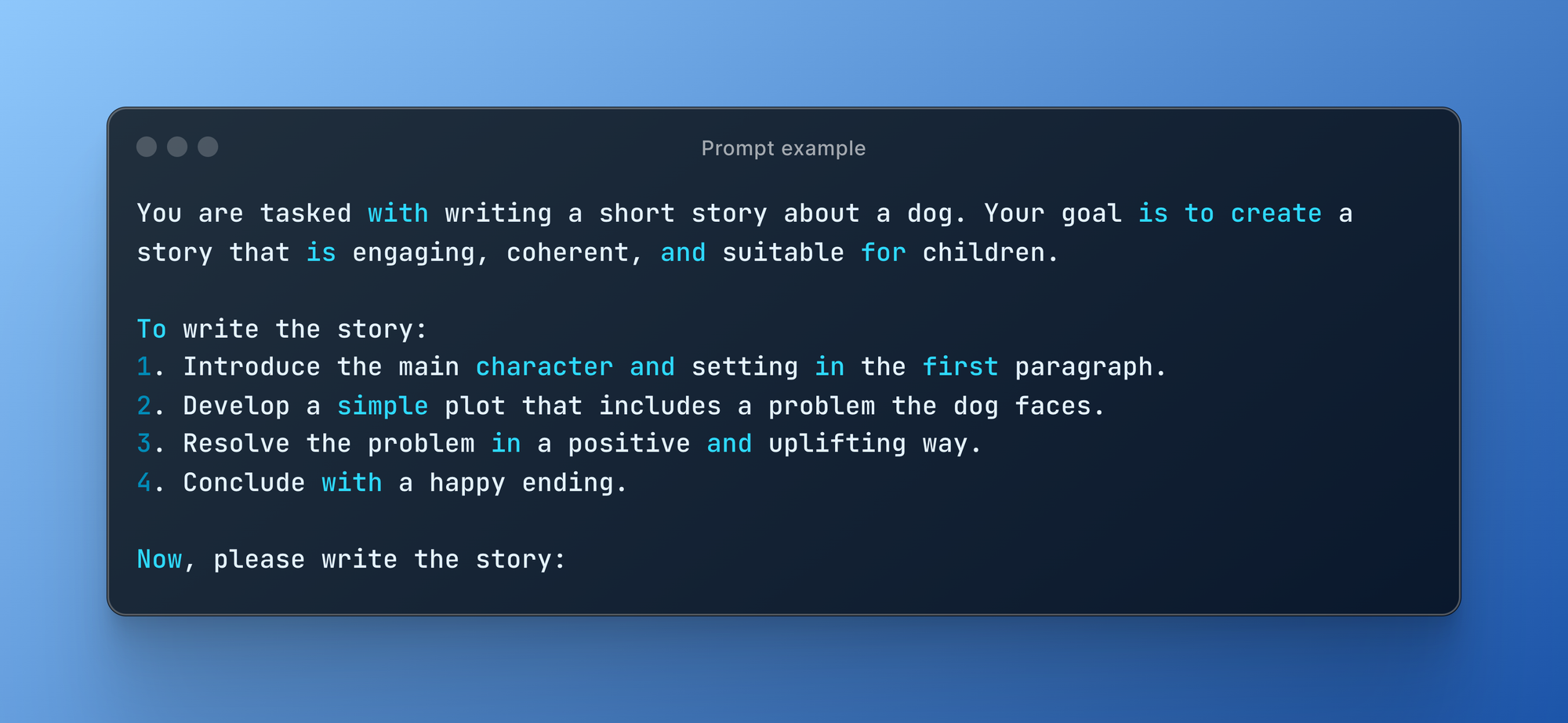

Example Transformation

Initial Prompt: "Write a story about a dog."

Optimized Prompt:

3. Advanced Prompting Techniques

Advanced prompting isn’t about complex tricks—it’s about clear communication and strategic iterations. Here are some key techniques and research-backed insights to help you refine your prompts.

Zero-shot Prompting

Provide a direct instruction without examples.

Prompt: "Translate the following sentence into Spanish: 'Good morning, how are you?'"

Output: "Buenos días, ¿cómo estás?"

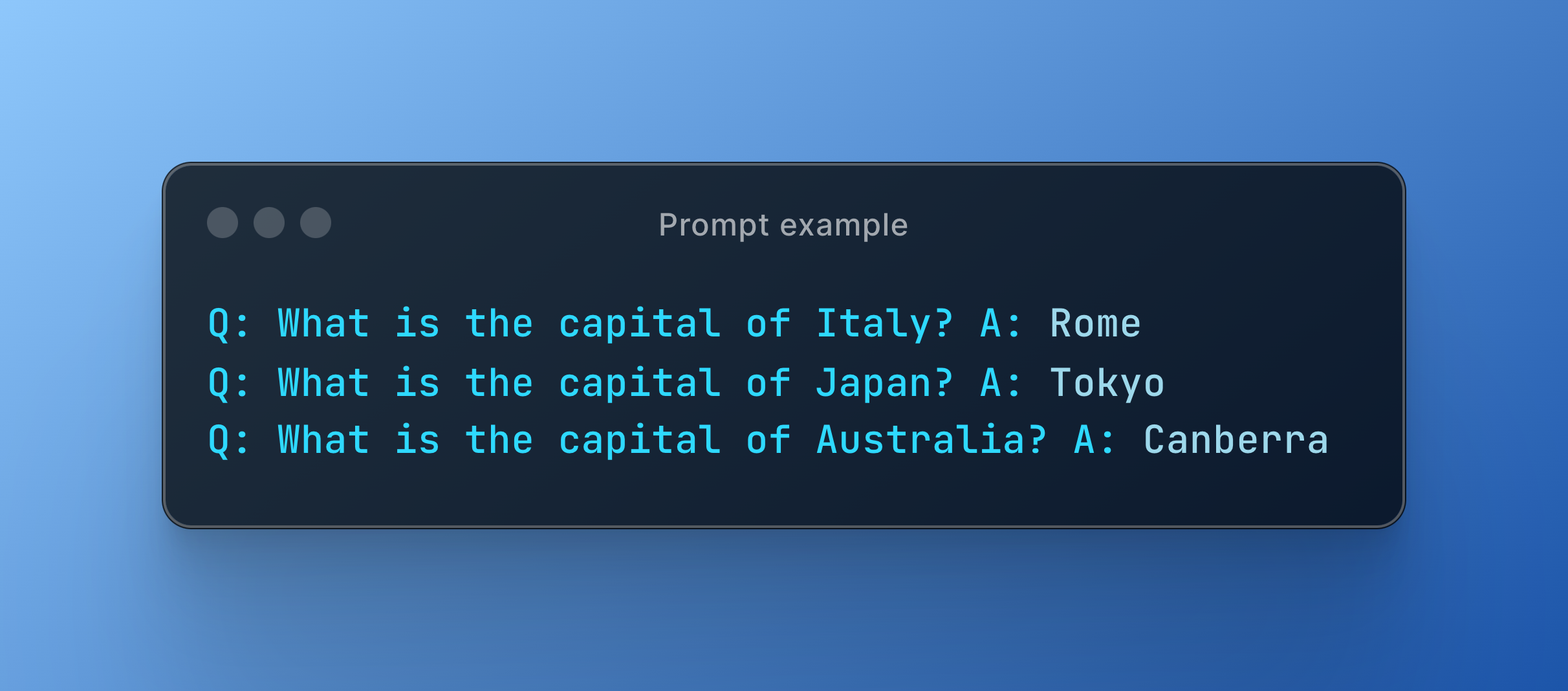

Few-shot Prompting

Provide a few examples to guide the model better.

Format: "Q: <question>? A: <answer>"

Example:
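One way to assemble a few-shot prompt in a Q/A layout is sketched below in Python. The function name `build_few_shot_prompt` and the sample translation pairs are illustrative assumptions; the final "A:" is left open so the model completes it.

```python
def build_few_shot_prompt(examples: list[tuple[str, str]], question: str) -> str:
    """Join worked Q/A pairs, then append the new question with an open answer."""
    blocks = [f"Q: {q}\nA: {a}" for q, a in examples]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


# Illustrative examples chosen for this sketch.
examples = [
    ("Translate 'Good morning' into Spanish.", "Buenos días"),
    ("Translate 'Thank you' into Spanish.", "Gracias"),
]
prompt = build_few_shot_prompt(examples, "Translate 'Good night' into Spanish.")
print(prompt)
```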

Chain-of-Thought Prompting

Encourage the model to break down its reasoning steps.

Prompt: "Explain the process of photosynthesis step by step."

Output: "First, plants take in carbon dioxide from the air and water from the soil. Next, using sunlight, they convert these into glucose and oxygen. Finally, the glucose is used as energy, and the oxygen is released into the air."

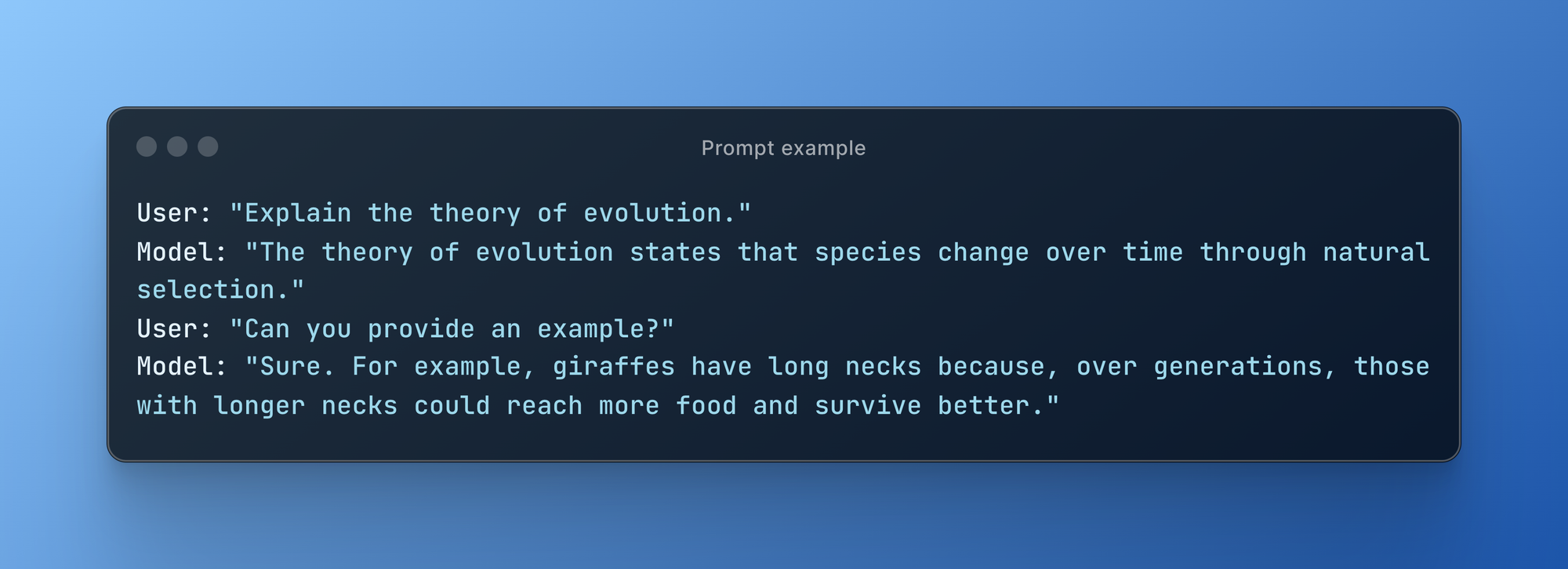

Chain Prompting

Iterate with the model to refine its answers.

Example:
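The iteration loop can be sketched in Python as follows. This is only an outline under stated assumptions: `ask_model` is a hypothetical callable standing in for whatever model client you use, and `fake_model` exists solely so the sketch runs without a network call.

```python
from typing import Callable


def refine(ask_model: Callable[[str], str], task: str, feedback: list[str]) -> list[str]:
    """Return the first draft plus one revised draft per feedback note."""
    drafts = [ask_model(task)]
    for note in feedback:
        # Feed the previous answer back in together with the new instruction.
        follow_up = (
            f"Task: {task}\n"
            f"Previous answer: {drafts[-1]}\n"
            f"Revise the answer. {note}"
        )
        drafts.append(ask_model(follow_up))
    return drafts


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real model call; it only reports the prompt size.
    return f"(answer to a {len(prompt)}-character prompt)"


drafts = refine(
    fake_model,
    "Write a story about a dog.",
    ["Make it shorter.", "Add a twist ending."],
)
print(len(drafts), "drafts produced")
```

Each follow-up carries the previous draft, so the model revises its own answer rather than starting over.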

Retrieval-Augmented Generation (RAG)

Retrieve relevant information before prompting.

Prompt: "Here is some context about climate change. Based on this information, explain the effects of climate change on polar bears."
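To make the retrieve-then-prompt flow concrete, here is a toy Python sketch. It ranks documents by simple word overlap, which is an assumption made to keep the example self-contained; production systems typically use embedding-based search instead. The function names and sample documents are illustrative.

```python
import re


def words(text: str) -> set[str]:
    """Lowercase a text and split it into a set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Rank documents by how many words they share with the query (toy scoring)."""
    query_words = words(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & words(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_rag_prompt(query: str, documents: list[str], top_k: int = 2) -> str:
    """Place the retrieved passages ahead of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return f"Here is some context:\n{context}\n\nBased on this information, {query}"


documents = [
    "Polar bears hunt seals from sea ice.",
    "Sea ice in the Arctic is shrinking as temperatures rise.",
    "Most penguin species live in the Southern Hemisphere.",
]
query = "explain the effects of climate change on polar bears."
print(build_rag_prompt(query, documents))
```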

Example with Web Search

Prompt: "What is the latest development in quantum computing? Use the web search tool before answering."

4. Output Optimization

To improve the quality and structure of outputs, consider these methods:

Adjust the Temperature Parameter

Control the randomness of outputs:

- Lower values: More deterministic responses.

- Higher values: More creative responses.
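The effect of temperature is easier to see with numbers. The Python sketch below applies the common formulation, in which token scores (logits) are divided by the temperature before a softmax. The logits here are made-up values for illustration, and a given model provider may implement sampling differently.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores into probabilities after scaling by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(value - peak) for value in scaled]
    total = sum(weights)
    return [weight / total for weight in weights]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for temperature in (0.2, 1.0, 2.0):
    probabilities = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probabilities])
```

At a low temperature almost all probability lands on the top-scoring token, while a high temperature spreads it more evenly across the candidates.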

Self-Consistency

Prompt the model several times with the same input, then keep the answer that the responses agree on most often.
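A minimal Python sketch of this idea is a majority vote over sampled answers. It assumes each response can be reduced to a short, comparable answer string; `sample` is a hypothetical callable standing in for a model call, and the canned answers below exist only to make the example runnable.

```python
from collections import Counter
from typing import Callable


def self_consistent_answer(sample: Callable[[str], str], prompt: str, n: int = 5) -> str:
    """Ask n times and return the most frequent normalized answer."""
    answers = [sample(prompt).strip().lower() for _ in range(n)]
    most_common_answer, _count = Counter(answers).most_common(1)[0]
    return most_common_answer


# Canned responses standing in for five sampled model outputs.
canned = iter(["42", "41", "42", "42 ", "40"])
result = self_consistent_answer(lambda _prompt: next(canned), "What is 6 * 7?", n=5)
print(result)
```

Voting like this works best for tasks with a single short answer; for open-ended text you would need some other way to compare responses.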

Regex Checks

Ensure the output respects a specific format, like checking for URLs or ensuring JSON compliance.
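Here is a short Python sketch of both checks. The URL pattern is deliberately loose and is an assumption for illustration, not a full URL validator. For JSON, the sketch uses the standard `json` parser rather than a regular expression, since a regex alone cannot reliably validate nested JSON.

```python
import json
import re
from typing import Optional

# Loose pattern: "http://" or "https://" followed by non-space characters.
URL_PATTERN = re.compile(r"https?://[^\s\"'<>]+")


def contains_url(text: str) -> bool:
    """Return True if the text includes something that looks like a URL."""
    return URL_PATTERN.search(text) is not None


def parse_json_object(text: str) -> Optional[dict]:
    """Return the parsed object, or None if the text is not a JSON object."""
    try:
        value = json.loads(text)
    except json.JSONDecodeError:
        return None
    return value if isinstance(value, dict) else None


print(contains_url("See https://example.com/docs for details"))
print(parse_json_object('{"title": "AI agents", "posts": 2}'))
print(parse_json_object("Sure! Here is your JSON:"))
```

When a check fails, a common next step is to re-prompt the model with the error and ask it to correct the format.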

Constrained Sampling

Restrict which tokens the model may produce during generation, for example by banning specific words. This usually requires control over the sampling step, such as running an open-weight model like Llama 3 with llama.cpp.
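The underlying mechanism can be sketched with a toy vocabulary in Python. This is not the llama.cpp API. It only illustrates the idea of dropping banned candidates before sampling, and the token scores are made up. Real runtimes work on token IDs, and a single banned word may span several tokens.

```python
import math
import random


def sample_with_banned_tokens(
    logits: dict[str, float], banned: set[str], rng: random.Random
) -> str:
    """Sample one token after removing every banned candidate."""
    allowed = {token: score for token, score in logits.items() if token not in banned}
    if not allowed:
        raise ValueError("every candidate token is banned")
    peak = max(allowed.values())  # subtract the max for numerical stability
    tokens = list(allowed)
    weights = [math.exp(allowed[token] - peak) for token in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


logits = {"great": 2.0, "terrible": 1.5, "fine": 0.5}  # made-up scores
rng = random.Random(0)
picks = [sample_with_banned_tokens(logits, {"terrible"}, rng) for _ in range(10)]
print(picks)
```

Because the banned token is removed before the draw, it can never be sampled, no matter how high its original score was.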

Tool (Function) Calling

Let the model return structured output as valid JSON, including the arguments for a function call that your own code then executes.
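On the application side, handling a tool call might look like the Python sketch below. The JSON shape with `name` and `arguments` keys is an assumption for illustration, since each provider defines its own format, and `get_weather` is a hypothetical tool that does no real lookup.

```python
import json


def get_weather(city: str) -> str:
    # Hypothetical tool; a real one would query a weather service.
    return f"Weather lookup requested for {city}"


# Registry of functions the model is allowed to call.
TOOLS = {"get_weather": get_weather}


def dispatch_tool_call(raw: str) -> str:
    """Parse the model's JSON tool call and run the matching function."""
    call = json.loads(raw)
    name = call["name"]
    arguments = call["arguments"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    if not isinstance(arguments, dict):
        raise ValueError("arguments must be a JSON object")
    return TOOLS[name](**arguments)


model_output = '{"name": "get_weather", "arguments": {"city": "New York City"}}'
print(dispatch_tool_call(model_output))
```

Looking the name up in an explicit registry means the model can only trigger functions you have chosen to expose.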

Research Insight

A study by MIT demonstrated that using self-consistency in prompts can improve response accuracy by 20%. [MIT AI Lab, 2023]

Example Use Case

Combining temperature adjustment and self-consistency:

Prompt: "Generate 2 posts about AI agents."

Set temperature: High for creativity.

Self-consistency: Generate multiple versions and select the best one.

By experimenting with these approaches and understanding the trade-offs between creativity, accuracy, and structure, you can effectively tailor your interactions with AI models to your specific needs.

The Strategic Importance of Structured Prompting

Understanding prompting extends beyond crafting effective questions—it's about unlocking AI's full potential for your business. Jutsu emphasizes delivering a superior user experience by providing tailored environments to meet your specific needs. Structured prompts can lead to significant improvements in AI performance across various tasks, making prompt engineering a crucial skill for maximizing your AI investments.

Key Benefits:

- Efficient Operations: Well-structured prompts simplify tasks and enhance operational efficiency.

- Enhanced Decision-Making: Accurate prompts enable better data analysis and insights, leading to informed decisions.

- Innovative Product Development: Effective prompts drive innovation by leveraging AI to create new solutions.

Diverse Prompting Techniques for Varied Applications

The research identifies 58 different text-based prompting techniques, along with additional multilingual and multimodal techniques. This diversity offers a rich toolkit for addressing various business challenges. Some key categories include:

- In-Context Learning: Allows AI models to adapt to specific tasks without extensive retraining.

- Zero-Shot Prompting: Enables AI to perform tasks without prior examples, increasing flexibility.

- Thought Generation: Guides AI to break down complex problems into manageable steps.

- Decomposition: Helps in tackling large, complex tasks by breaking them into smaller subtasks.

- Ensembling: Combines multiple AI outputs to improve accuracy and reliability.

Understanding these prompting techniques empowers your leadership team to select the most appropriate approach for each use case, potentially resulting in more effective GenAI tool implementations and better business outcomes.

Multilingual and Multimodal Capabilities

The research study also explores prompting techniques beyond English text, including multilingual and multimodal applications. This is particularly relevant for global organizations or those dealing with diverse data types.

- Multilingual Prompting: Helps break down language barriers, enabling more efficient global operations and expanding market reach worldwide.

- Multimodal Prompting: Incorporates various data types such as images, audio, and video, opening up new possibilities for GenAI applications in areas like content creation, quality control, and customer service.

Practical Applications: Lessons from Case Studies

The research paper includes two insightful case studies that demonstrate the practical application of GenAI prompting techniques:

- Benchmark Testing: Various prompting techniques were tested against the MMLU benchmark, providing insights into their relative effectiveness. This approach can be adapted to evaluate and optimize AI performance for specific business use cases.

- Real-World Application: A detailed exploration of prompt engineering was conducted to identify signals of frantic hopelessness in support-seeking individuals' text. This case study illustrates how carefully crafted prompts can be used to extract valuable insights from unstructured data, with potential applications in areas such as customer sentiment analysis or risk assessment.

These case studies highlight the importance of experimentation and iterative refinement in developing effective GenAI prompting strategies for your organization’s unique needs.

Resources & References

- OpenAI Research Paper on Detailed Prompts: Study showing that detailed prompts increase response relevance by up to 30% (OpenAI Research Paper, 2023).

- Anthropic's Generate a Prompt: Tool that helps in crafting more detailed and clear prompts.

- Cohere’s Prompt Tuner: Tool that optimizes your initial prompts for better outcomes.

- MIT AI Lab Study on Self-Consistency: Research showing that self-consistency in prompts can improve response accuracy by 20% (MIT AI Lab, 2023).

Example Implementations

- llama.cpp: A framework for applying constrained sampling with open-weight models like Llama 3 (llama.cpp GitHub).

In conclusion, mastering the art of prompting involves understanding the fundamentals, experimenting with advanced techniques, and optimizing outputs to achieve desired results. Try using Jutsu for a better user experience that is tailored to your needs.

Thanks for reading!