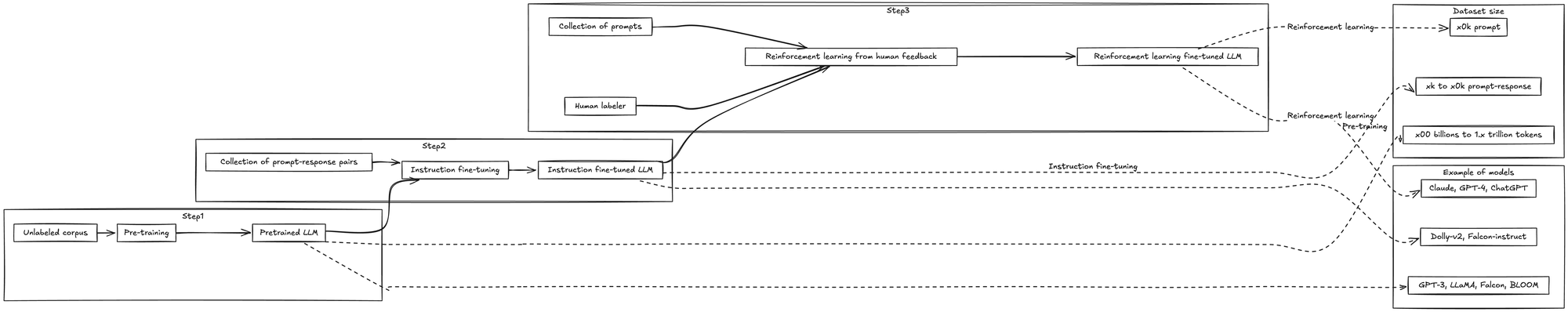

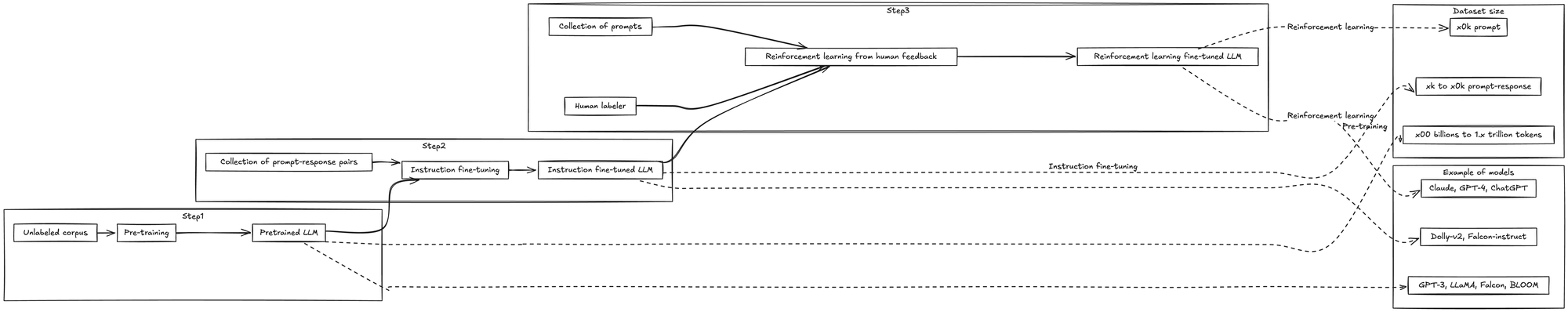

The Three Phases of Training Large Language Models (LLMs)

Large Language Models (LLMs) are transforming artificial intelligence, powering applications that range from chatbots to content generation. Their ability to understand and generate human-like text comes from a multi-stage training process. In this blog post, we will walk through its three main phases: language pre-training, instruction fine-tuning, and reinforcement learning.

1. Language Pre-Training

Language pre-training is the foundational phase in which LLMs learn the basic structures and nuances of language. This phase is crucial as it forms the basis for all subsequent tasks the LLM will perform.

Key Aspects:

- Data Gathering: Very large text corpora are collected from many sources, including books, articles, and websites. The data is deliberately diverse so that it covers a wide range of writing styles and contexts.

- Masked Language Modeling (MLM): Some words (more precisely, tokens) in a sentence are hidden, and the model is trained to predict them from the surrounding context on both sides. This objective is used mainly by encoder models such as BERT, and it helps the model learn the context and structure of language.

- Autoregressive Training: The model predicts the next token in a sequence using only the tokens that come before it. This is the main pre-training objective for generative LLMs such as the GPT family, and it is what lets them generate coherent text one token at a time.

- Understanding Nuances: By repeatedly predicting masked or upcoming words, the model picks up grammar, syntax, idioms, and other nuances of language, which enables it to generate meaningful text.
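To make the two objectives concrete, here is a minimal sketch of how training examples could be built from one sentence. It assumes whitespace splitting in place of a real subword tokenizer, and the function names are hypothetical. The MLM part is simplified: BERT, for instance, masks about 15% of tokens but replaces only 80% of those with `[MASK]` (10% become random tokens and 10% stay unchanged), whereas this sketch always uses `[MASK]`.

```python
import random

def make_mlm_example(tokens, mask_prob=0.15, mask_token="[MASK]", seed=0):
    """Replace a random subset of tokens with a mask token.

    Returns (inputs, targets), where targets maps each masked position
    to the original token the model must predict.
    """
    rng = random.Random(seed)
    inputs, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            inputs.append(mask_token)
            targets[i] = tok
        else:
            inputs.append(tok)
    return inputs, targets

def make_autoregressive_examples(tokens):
    """Pair every prefix of the sequence with the token that follows it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

# Whitespace splitting stands in for a real subword tokenizer (assumption).
tokens = "the quick brown fox jumps over the lazy dog".split()
print(make_mlm_example(tokens, mask_prob=0.3))
print(make_autoregressive_examples(tokens)[-1])
# -> (['the', 'quick', 'brown', 'fox', 'jumps', 'over', 'the', 'lazy'], 'dog')
```

Note that one sentence yields many autoregressive training pairs (one per position), which is part of why next-token prediction makes efficient use of raw text.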

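Example: With masked language modeling, the model might receive the sentence "The quick brown fox jumps over the _ dog" and be asked to predict the missing word "lazy." An autoregressive model would instead see "The quick brown fox jumps over the" and predict the next word. Repeated across an enormous number of sentences, this process lets the model internalize language patterns.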
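The fill-in-the-blank idea can be illustrated with a deliberately crude stand-in for a neural network: count which word appears between a given left and right neighbor in a tiny corpus. Real LLMs learn these statistics with neural networks over far more context; the corpus and the `fill_blank` helper here are hypothetical and only show how patterns in data make "lazy" the likely answer.

```python
from collections import Counter

def fill_blank(corpus_sentences, left, right):
    """Return the word seen most often between `left` and `right`, or None."""
    counts = Counter()
    for sentence in corpus_sentences:
        words = sentence.lower().split()
        for i in range(1, len(words) - 1):
            if words[i - 1] == left and words[i + 1] == right:
                counts[words[i]] += 1
    if not counts:
        return None  # the context never appeared in the corpus
    return counts.most_common(1)[0][0]

# Tiny hypothetical corpus, for illustration only.
corpus = [
    "The quick brown fox jumps over the lazy dog",
    "A lazy dog sleeps in the sun",
    "The lazy dog ignored the mail carrier",
    "The old dog barked at the cat",
]
print(fill_blank(corpus, "the", "dog"))  # -> lazy
```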

2. Instruction Fine-Tuning

Once the LLM has a solid understanding of language, it moves to instruction fine-tuning. This phase involves training the model to perform specific tasks by following given instructions.

Key Aspects:

- Task-Specific Instructions: The model receives different types of instructions, such as “Translate this sentence into French” or “Summarize this news article.”

- Supervised Learning: The model is provided with both instructions and the correct responses, enabling it to learn through examples.

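- Parameter Tuning: For each example, the model's predictions are compared with the reference response using a loss function (typically cross-entropy over the response tokens), and its neural network parameters are updated by gradient descent so that correct, relevant responses become more likely.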
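One common way to implement the supervised learning step above is to average the negative log-likelihood (cross-entropy) over the reference response tokens only, ignoring the tokens of the instruction itself. The sketch assumes the model has already assigned a probability to each reference token; `sft_loss`, `token_probs`, and `is_response` are hypothetical names, and masking the instruction tokens is a widespread convention rather than a universal rule. In a real framework, an optimizer would then compute gradients of this loss and update the parameters.

```python
import math

def sft_loss(token_probs, is_response):
    """Average negative log-likelihood over response tokens only.

    token_probs[i]: probability the model assigned to the correct (reference) token i.
    is_response[i]: True if token i belongs to the reference response,
                    False if it belongs to the instruction (not scored).
    """
    if len(token_probs) != len(is_response):
        raise ValueError("token_probs and is_response must have the same length")
    losses = [-math.log(p) for p, r in zip(token_probs, is_response) if r]
    if not losses:
        raise ValueError("no response tokens to score")
    return sum(losses) / len(losses)

# Two instruction tokens (ignored) followed by two response tokens.
loss = sft_loss([0.9, 0.8, 0.5, 0.25], [False, False, True, True])
print(round(loss, 4))  # -> 1.0397
```

A perfect model would assign probability 1.0 to every response token and reach a loss of 0.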

Example: An LLM could be fine-tuned for customer support by providing it with a dataset containing common customer queries and their ideal responses. For instance, the instruction “How can I reset my password?” would be paired with a detailed but concise answer.
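To make the customer-support example concrete, each query and answer pair is usually rendered into a prompt string plus a target response that the model learns to produce. The template, the `SupportExample` class, and the sample answer below are all hypothetical; real projects and model families define their own prompt formats.

```python
from dataclasses import dataclass

@dataclass
class SupportExample:
    instruction: str
    response: str

# Hypothetical prompt template; real fine-tuning setups define their own.
TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n"

def to_training_pair(example):
    """Return (prompt, target): the model is trained to produce `target` after `prompt`."""
    prompt = TEMPLATE.format(instruction=example.instruction.strip())
    target = example.response.strip()
    return prompt, target

dataset = [
    SupportExample(
        "How can I reset my password?",
        "Go to Settings > Account > Reset Password, then follow the link sent to your email.",
    ),
]
for prompt, target in map(to_training_pair, dataset):
    print(prompt + target)
```

Keeping the prompt and target separate makes it easy to score only the target tokens during training.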

Practical Use Case: Chatbots in customer service scenarios often utilize fine-tuned LLMs to provide responses that are both accurate and helpful, enhancing user satisfaction.

3. Reinforcement Learning

Even after instruction fine-tuning, LLMs can generate outputs that might be correct but not particularly engaging or user-friendly. Reinforcement learning fine-tunes the model further by aligning it with human preferences.

Key Aspects:

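- Reward Mechanism: Responses generated by the LLM are scored according to how well they match human preferences, with higher rewards for responses people prefer. In practice, the scores usually come from a separate reward model trained on human comparisons between pairs of responses.

- Policy Gradient Methods: Algorithms such as Proximal Policy Optimization (PPO) adjust the model's parameters so that responses (actions) earning higher rewards become more likely and low-reward responses become less likely. Repeating this feedback loop gradually refines the model's responses.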

- Human-in-the-Loop: Often, human reviewers rate the quality of responses, providing valuable feedback that the model uses to improve.
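Many RLHF pipelines turn human rankings into a reward signal by training a separate reward model on pairs of responses, where a reviewer marked one as "chosen" and the other as "rejected." A standard choice is a pairwise loss based on the Bradley-Terry model, which treats the probability that the chosen response is preferred as the sigmoid of the reward difference. The sketch below computes only that loss for given scores; the function names and the example scores are hypothetical, and the reward model itself is out of scope.

```python
import math

def preference_probability(reward_chosen, reward_rejected):
    """Bradley-Terry probability that 'chosen' is preferred: sigmoid(r_c - r_r)."""
    d = reward_chosen - reward_rejected
    if d >= 0:
        return 1.0 / (1.0 + math.exp(-d))
    e = math.exp(d)  # d < 0, so this cannot overflow
    return e / (1.0 + e)

def pairwise_loss(reward_chosen, reward_rejected):
    """-log sigmoid(r_c - r_r): small when the reward model agrees with the human label."""
    d = reward_chosen - reward_rejected
    if d >= 0:
        return math.log1p(math.exp(-d))
    return -d + math.log1p(math.exp(d))  # algebraically equal, but stable for d < 0

# Hypothetical reward-model scores for one human-ranked pair.
print(pairwise_loss(2.0, 0.5))  # scores agree with the human: low loss
print(pairwise_loss(0.5, 2.0))  # scores disagree: high loss
```

Minimizing this loss over many ranked pairs pushes the reward model to score preferred responses higher, and those scores then serve as rewards for the policy gradient step.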

Example: An LLM designed to write creative stories might initially produce dry, factual narratives. Through reinforcement learning, it receives feedback showing that stories with imaginative twists and rich descriptions earn higher rewards. The model then adapts, producing more engaging and creative stories over time.
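The adaptation in the story example can be sketched as a toy policy-gradient loop. Here the "model" is just a softmax policy over three hypothetical story styles with fixed, made-up reward scores. Because there are only three actions, the sketch computes the exact gradient of the expected reward, dJ/dlogit_k = p_k * (r_k - J), instead of sampling responses as REINFORCE or PPO would on a real LLM. The styles, rewards, and `train_policy` helper are all illustrative assumptions.

```python
import math

def softmax(logits):
    """Convert logits to probabilities (shifted by the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train_policy(rewards, steps=1000, lr=0.5):
    """Gradient ascent on expected reward J = sum_k p_k * r_k for a softmax policy."""
    logits = [0.0] * len(rewards)  # start from a uniform policy
    for _ in range(steps):
        probs = softmax(logits)
        expected = sum(p * r for p, r in zip(probs, rewards))
        # Exact policy gradient: raise logits of above-average actions, lower the rest.
        logits = [
            logit + lr * p * (r - expected)
            for logit, p, r in zip(logits, probs, rewards)
        ]
    return softmax(logits)

# Hypothetical story styles and reward scores, for illustration only.
styles = ["dry, factual narrative", "some descriptive detail", "imaginative twist, rich description"]
rewards = [0.1, 0.5, 1.0]
for style, p in zip(styles, train_policy(rewards)):
    print(f"{style}: {p:.3f}")
```

Over training, probability mass shifts toward the highest-reward style, which mirrors how reinforcement learning nudges an LLM toward responses that people rate more highly.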

Practical Use Case: Reinforcement learning is not limited to LLMs. Personalized content recommendation systems, for example, can use it to suggest articles, videos, or products that users are more likely to enjoy, based on their past interactions and feedback.

Conclusion

Moving from language pre-training through instruction fine-tuning to reinforcement learning equips Large Language Models (LLMs) with the skills needed to understand and generate meaningful text. This three-phase training process helps make LLMs not only linguistically capable but also proficient at performing tasks and at generating responses that match human preferences.

As LLM technology continues to advance, its potential applications will expand, offering significant benefits and changing how we interact with technology.