Introducing OpenAI’s o1-preview: The Next Big Leap in AI Reasoning

OpenAI has just announced a completely new model series and dropped the familiar GPT branding. Say hello to the new “o1” series. The move hints at a significant shift, and o1 may well be the mysterious “Strawberry” project that had everyone buzzing. So, what's new with o1?

What's in the o1 Series?

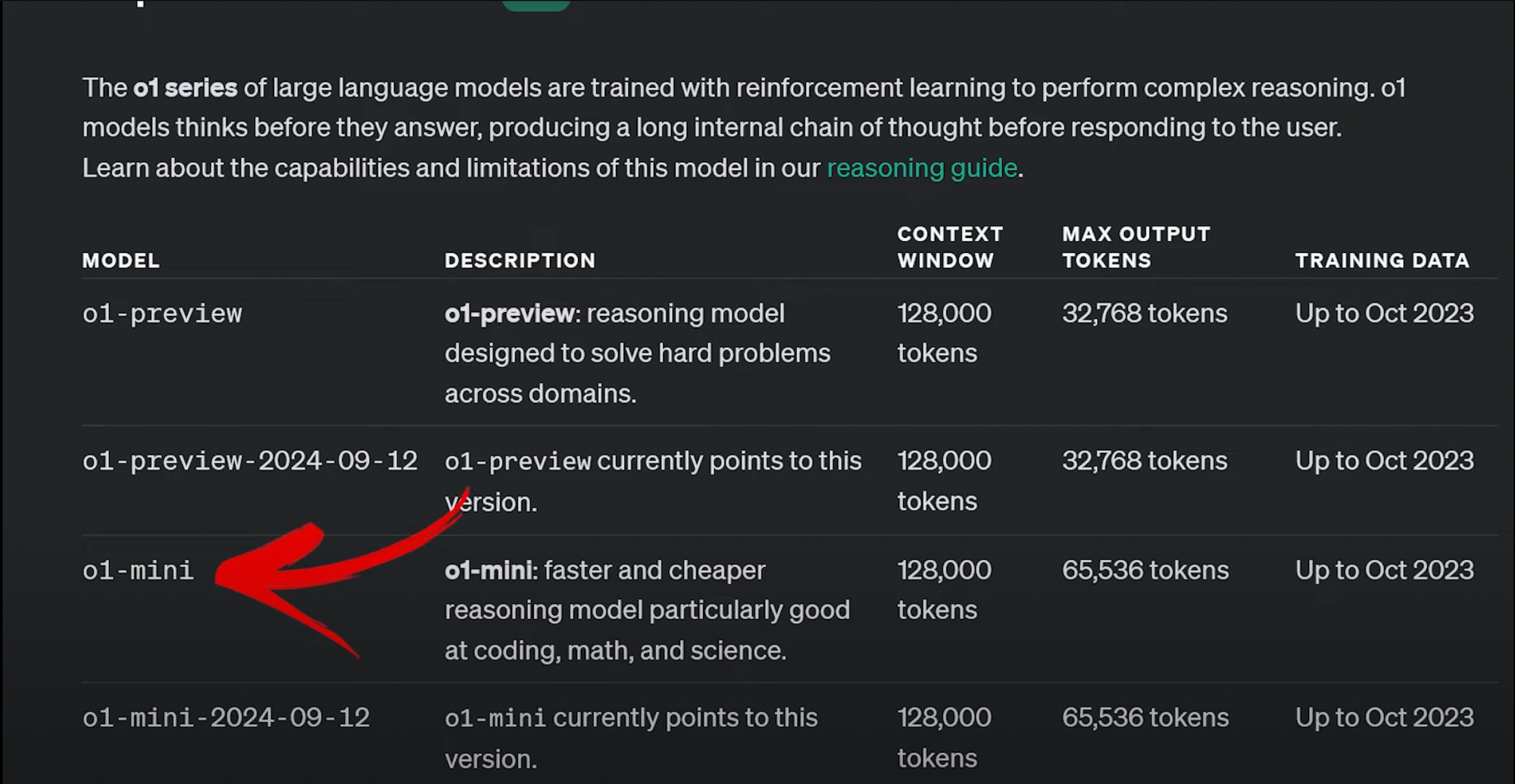

The o1 series includes two models: o1-preview and o1-mini, a more affordable alternative. Both offer a 128k-token context window, but here’s where it gets interesting:

- o1-preview: 3 to 4 times pricier than GPT-4o, but delivers groundbreaking performance on logical reasoning tasks.

- o1-mini: considerably cheaper (OpenAI puts it at 80% less than o1-preview) and designed for cost-effective use.

Why is o1-preview Special?

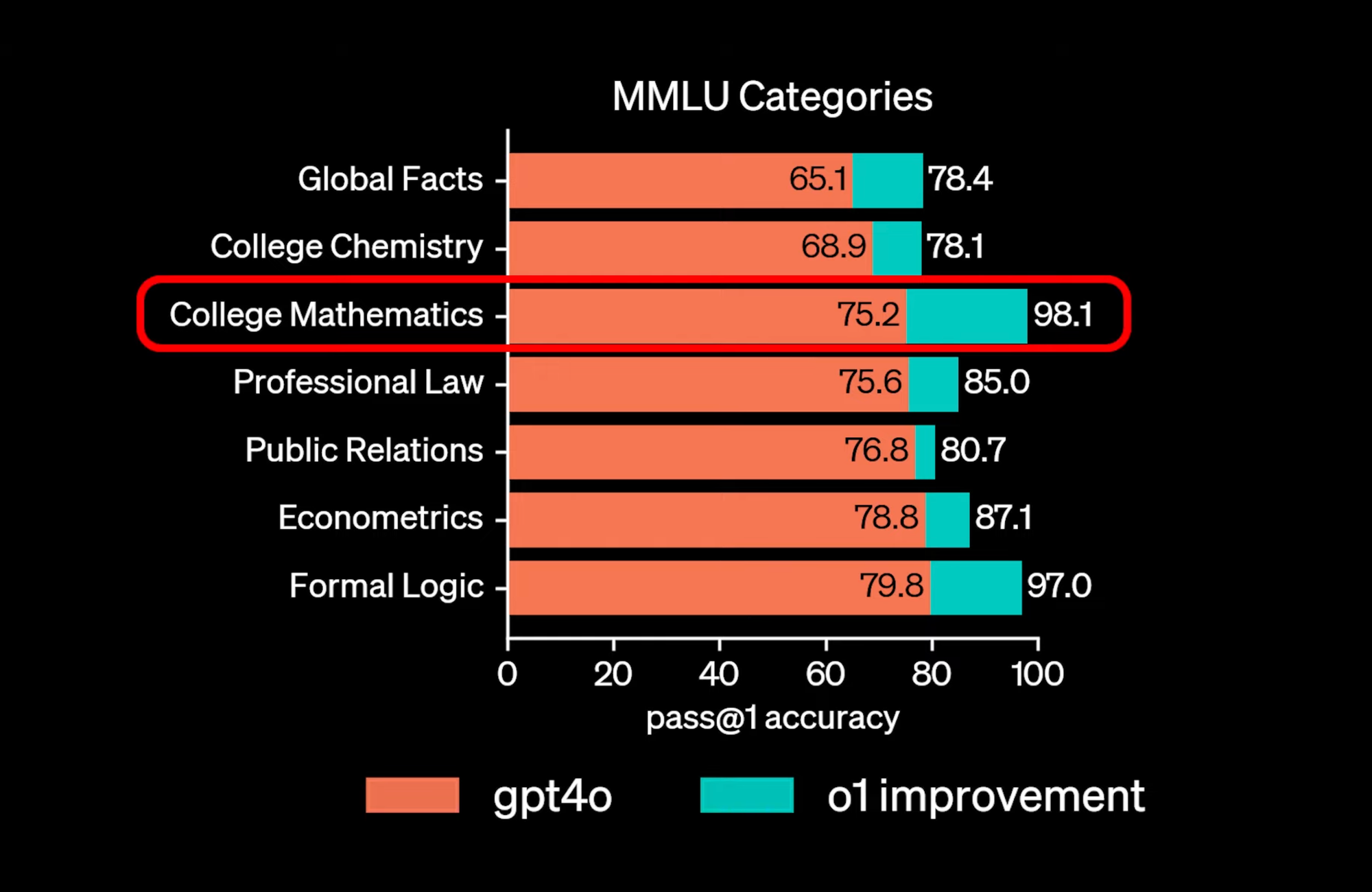

o1-preview is noticeably slower, often taking 20 to 30 seconds to generate an answer, but the extra time is offset by its stunning performance. It excels in scientific domains such as physics, chemistry, and biology. Here’s a glimpse of its abilities:

- International Mathematics Olympiad (IMO) qualifying exam: GPT-4o solved only 13% of problems, while the unreleased o1 model scored a staggering 83%. The currently available o1-preview scored 56%, a jump of 43 percentage points.

- Formal logic: accuracy jumped from 80% with GPT-4o to an impressive 97%.
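These gains are differences in percentage points, which is not the same thing as a percentage increase. The short Python sketch below computes both from the scores quoted above; the constant and function names are purely illustrative.

```python
# Benchmark scores quoted in this article (percent of problems solved).
IMO_QUAL_GPT4O = 13.0       # IMO qualifying exam, GPT-4o
IMO_QUAL_O1_PREVIEW = 56.0  # IMO qualifying exam, o1-preview
LOGIC_BEFORE = 80.0         # formal logic, earlier model
LOGIC_AFTER = 97.0          # formal logic, o1

def point_gain(before: float, after: float) -> float:
    """Absolute improvement, measured in percentage points."""
    return after - before

def relative_gain(before: float, after: float) -> float:
    """Relative improvement, as a percentage of the starting score."""
    return (after - before) / before * 100

print(point_gain(IMO_QUAL_GPT4O, IMO_QUAL_O1_PREVIEW))            # 43.0 points
print(round(relative_gain(IMO_QUAL_GPT4O, IMO_QUAL_O1_PREVIEW)))  # 331 (% relative increase)
print(point_gain(LOGIC_BEFORE, LOGIC_AFTER))                      # 17.0 points
```

Both numbers describe the same result: 56 / 13 ≈ 4.3, so o1-preview solves more than four times as many of these problems as GPT-4o, which is a 331% relative increase but a 43-point absolute one.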

However, o1-preview isn’t an all-in-one solution. In areas such as English literature, the improvements were much smaller. So while this isn't AGI (artificial general intelligence), it's a big step forward for specialized reasoning tasks.

The Magic Behind o1

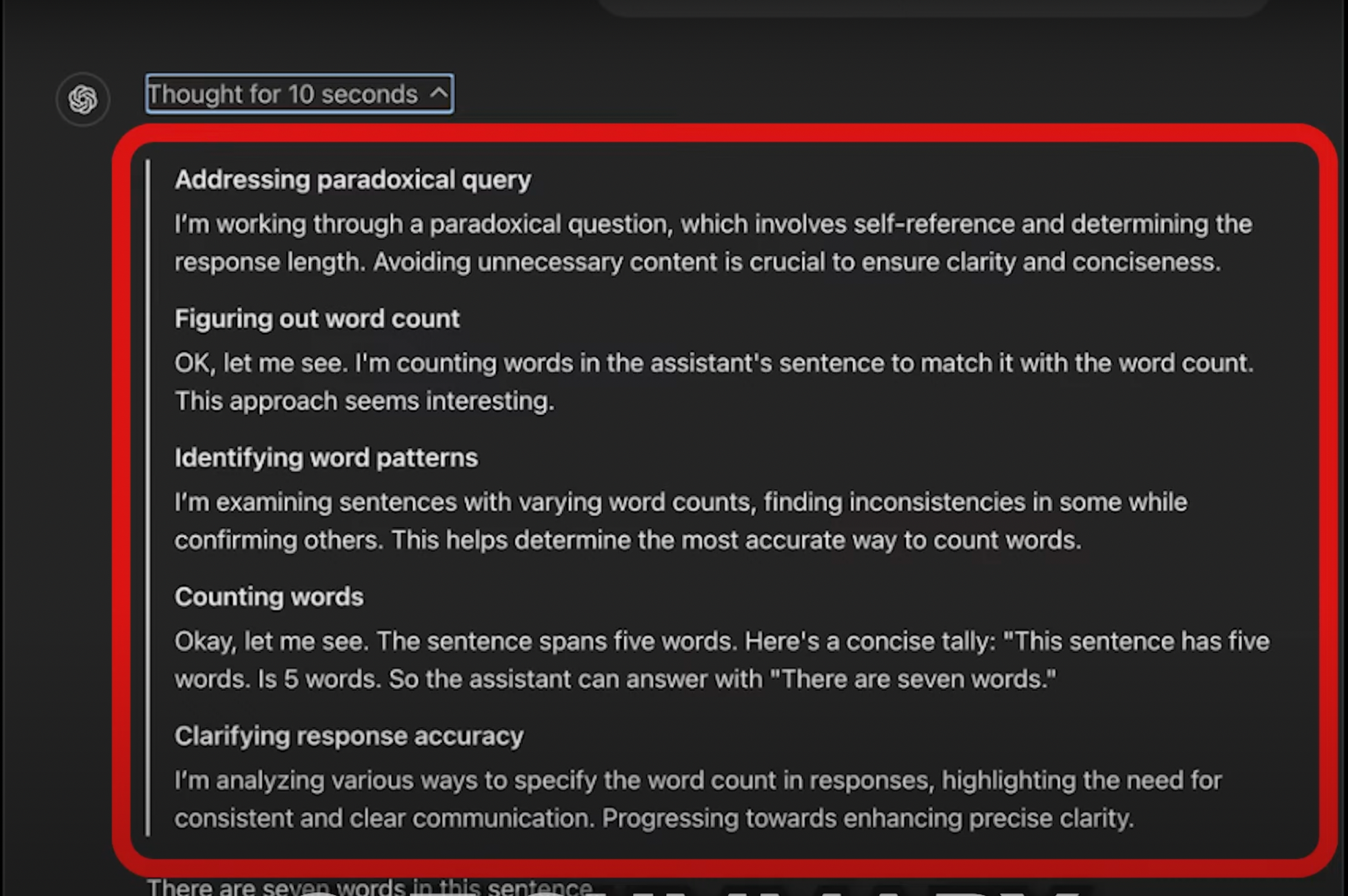

So how did OpenAI achieve this? The company hasn’t disclosed all the details, but it has provided a broad outline. The key is a combination of reinforcement learning and “chain of thought” reasoning: the model works through the problem step by step, planning, reflecting on, and refining its answer before presenting it to you. It's as though the model thinks before it speaks, and because this thought process is baked into its training, the results are more consistent, with fewer errors.

One fascinating, if speculative, point is that o1's private chain of thought may involve generating a huge number of tokens, possibly upwards of 100,000 per query. This resource-intensive process is part of why the model is currently limited to paid users, with a cap of 30 messages per week. These limits may sound extreme, but they highlight a shift in AI's focus from training-time compute alone to "inference-time scaling," where models can think longer and perform better.
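To make the cost of hidden reasoning concrete, here is a rough per-request cost estimator in Python. It is a sketch under stated assumptions: the prices are o1-preview's launch API list prices ($15 per million input tokens and $60 per million output tokens; check current pricing), hidden reasoning tokens are billed at the output rate as OpenAI describes for its o1 models, and every token count in the example is hypothetical, as are the names `Pricing` and `query_cost`.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Pricing:
    input_per_million: float   # USD per 1M input tokens
    output_per_million: float  # USD per 1M output tokens

# Assumption: o1-preview launch list prices in USD; verify against current pricing.
O1_PREVIEW_LAUNCH = Pricing(input_per_million=15.00, output_per_million=60.00)

def query_cost(p: Pricing, input_tokens: int, visible_output_tokens: int,
               reasoning_tokens: int) -> float:
    """Estimate the USD cost of one request (hypothetical helper).

    Reasoning tokens are never shown to the user, but they are billed
    at the output-token rate, so they are added to the visible output.
    """
    billed_output = visible_output_tokens + reasoning_tokens
    return (input_tokens * p.input_per_million
            + billed_output * p.output_per_million) / 1_000_000

# Same prompt and same visible answer, with more or less hidden "thinking".
for reasoning in (0, 5_000, 25_000):
    cost = query_cost(O1_PREVIEW_LAUNCH, input_tokens=1_000,
                      visible_output_tokens=500, reasoning_tokens=reasoning)
    print(f"{reasoning:>6} reasoning tokens -> ${cost:.4f}")
```

With the prompt and the visible answer held fixed, the estimated bill grows in direct proportion to the hidden reasoning tokens on top of a fixed base cost, which is the economic side of inference-time scaling.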

What’s Next for o1?

For OpenAI, this represents a new way to scale AI: using more compute during inference, not only during pre-training. Researchers are now exploring how far this idea can be pushed, including whether models that think for hours, days, or even weeks could achieve even better results.
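OpenAI hasn't published how o1 turns extra inference compute into better answers, so the sketch below is only a generic illustration of the idea using a different and much simpler technique: sample several independent answers and take a majority vote (often called self-consistency). It assumes each attempt is correct with a fixed probability p, independently of the others, and computes how often the vote is right as the number of attempts grows. The function name is hypothetical.

```python
from math import comb

def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that more than half of n independent attempts are correct.

    Each attempt is assumed correct with probability p, independently.
    Use odd n so a tie is impossible. A correct strict majority always wins
    the vote, so for a plurality vote over many possible wrong answers this
    is a lower bound on accuracy.
    """
    if n % 2 == 0:
        raise ValueError("use an odd number of attempts")
    needed = n // 2 + 1  # smallest strict majority
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k)
               for k in range(needed, n + 1))

# More inference compute (more attempts) for a model that is right 60% of the time.
for n in (1, 5, 25, 101):
    print(f"{n:>3} attempts -> {majority_vote_accuracy(0.6, n):.3f}")
```

With p below 0.5 the same loop drives accuracy toward zero, so in this toy model extra compute only helps when each attempt is already better than chance.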

Some may argue that this is just fine-tuning on chain-of-thought data, but OpenAI appears to have refined its data synthesis techniques beyond what we've seen before: the model isn’t just using more data, it's using higher-quality synthetic datasets. Still, we need to see how o1-preview performs in real-world applications, and there's always the possibility that OpenAI has over-optimized the model for benchmarks (what some call “evaluation maxing”).

Wrapping It Up

As more people get access to o1-preview, we’ll get a better sense of how it performs outside of benchmarks. For now, the early results are incredibly impressive, even if there is still a long way to go before true AGI.

If you're curious, we'll be diving deeper into o1's performance in the coming weeks, with a detailed breakdown of its architecture and real-world applications. You can also stay up to date by subscribing to our newsletter, where we cover the latest advancements in AI, machine learning, and inference techniques. Don’t miss out!

Stay curious and keep innovating! 🌟